Probability Distributions

February 25, 2018

1. Idea

In a previous post, I explained Bayes' theorem and how it works. In this post, I will sketch some basic information about probability distributions and their features, as they are a cornerstone of Bayesian inference.

As its name suggests, a probability distribution is a function that describes how probability is distributed over the possible outcomes of an experiment or hypothesis.

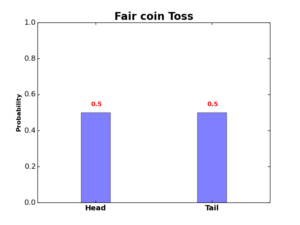

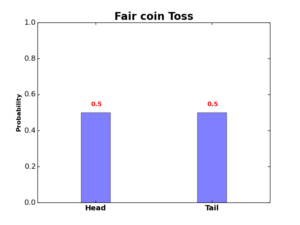

For example, if I ask you to guess the outcome of tossing a fair coin, what will your answer be? As you do not know the result yet, you may guess that the outcome is equally likely to be a head or a tail. Great! Your guess represents a probability distribution called the Bernoulli distribution (a Binomial distribution with a single trial), and it is visualized in the figure below.

Note here that the probabilities of all possible outcomes of the above experiment sum to 1, i.e.:

\begin{equation}

P(Head) + P(Tail) = 1

\end{equation}

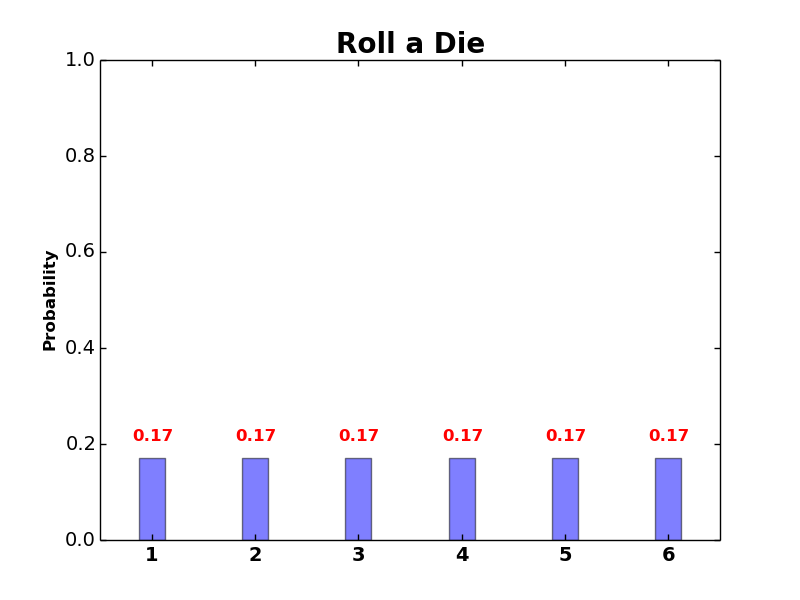

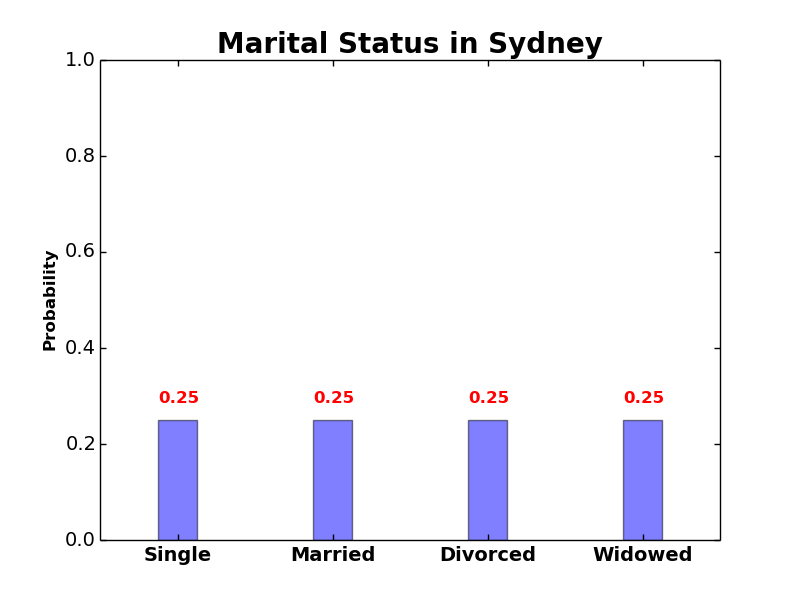

Similarly, we can predict the outcome of rolling a die, or the marital status of a woman in Sydney.

Note that each of the above is a probability distribution, as long as the probabilities of its possible values sum up to 1.
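The sum-to-1 condition is easy to check in code. The following is a minimal sketch, assuming each distribution is stored as a plain dictionary from outcome to probability; the helper name `is_valid_distribution` is hypothetical and only used for illustration.

```python
from fractions import Fraction

# Two small discrete distributions, written as outcome -> probability.
coin = {"Head": Fraction(1, 2), "Tail": Fraction(1, 2)}
die = {face: Fraction(1, 6) for face in range(1, 7)}


def is_valid_distribution(dist):
    """Return True if every probability is in [0, 1] and they sum to exactly 1."""
    probabilities = list(dist.values())
    return all(0 <= p <= 1 for p in probabilities) and sum(probabilities) == 1


print(is_valid_distribution(coin))
print(is_valid_distribution(die))
```

Exact fractions are used here so that the comparison with 1 is not affected by floating-point rounding.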

2. Significance

Probability distributions are extremely important in data science. In the following subsections, I will list some of their popular uses.

2.1 Probability Distributions Are Functions

The prime importance of probability distributions is that they are very well-formed functions. As you can imagine, the basic mission of statisticians and/or data scientists is to find functions (i.e. relationships between data variables). Therefore, because probability distributions are well-defined functions whose characteristics and features have been studied for decades, they are considered a fundamental way of representing, analyzing, and visualizing data variables.

2.2 Expressing Uncertainty

The second significance of probability distributions is that each of them can mathematically express our uncertainty about an event, experiment, or hypothesis. For example, if you toss a coin, you cannot know the result (head or tail) before the coin lands. Nevertheless, you can lay out all possible outcomes, each with a certain probability, using a probability distribution. The following figures show the difference between uncertainty and certainty.

Figure panels: Uncertainty; Certainty (100% Head)

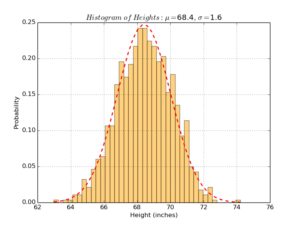

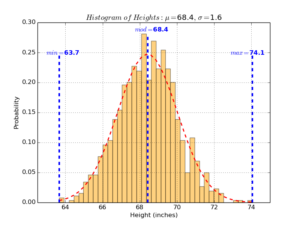

As another example, suppose you have a list of heights (in inches) of a random sample of students in a high school: \(heights=\{65.78, 71.52, 69.40, 68.22\}\), and you want to model these observations using a continuous function. The first logical way of thinking is to assume that the heights vector follows a normal probability distribution, and to compute both the mean and the variance of the sample data to visualize your probability function:

Expressing uncertainty plays a basic role in machine learning, as it enables us to make predictions about events which haven’t happened yet (e.g. earthquakes).
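As a rough sketch of this step, the snippet below estimates the mean and variance of the four heights and builds a normal distribution from them. It assumes Python's standard `statistics` module and uses the sample variance (dividing by n - 1); the variable names are illustrative only.

```python
from statistics import NormalDist, mean, variance

heights = [65.78, 71.52, 69.40, 68.22]

mu = mean(heights)        # sample mean
var = variance(heights)   # sample variance (divides by n - 1)

# NormalDist takes the mean and the standard deviation (square root of variance).
fitted = NormalDist(mu, var ** 0.5)

# Evaluate the fitted density at a few heights to trace the bell curve.
for h in (64, 66, 68, 70, 72):
    print(h, round(fitted.pdf(h), 4))
```

With only four observations the fitted curve is a very rough model; the point is only to show how two numbers (mean and variance) pin down the whole normal curve.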

2.3 Exploring Statistics

The third benefit of probability distributions is that once you define the distribution your data is drawn from, you can easily find interesting statistics (or facts) about your data. For example, given the above heights example, once you have found a probability distribution (e.g. a normal distribution) to express it, you can answer questions like: what is the maximum height? The minimum height? The variance between heights? The most common height? And so on.
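A small sketch of such questions, assuming the four heights from the earlier example and a normal model fitted with `statistics.NormalDist.from_samples`. Since a normal distribution has no finite maximum or minimum, a central 95% interval is used below as a stand-in for the range; that choice is an assumption of this sketch.

```python
from statistics import NormalDist

heights = [65.78, 71.52, 69.40, 68.22]
fitted = NormalDist.from_samples(heights)

most_common_height = fitted.mean      # the mode of a normal distribution equals its mean
height_variance = fitted.variance

# Probability that a student is taller than 70 inches under the fitted model.
p_taller_than_70 = 1 - fitted.cdf(70)

# Central 95% interval: 2.5% of the probability lies below `low`, 2.5% above `high`.
low, high = fitted.inv_cdf(0.025), fitted.inv_cdf(0.975)

print(round(most_common_height, 2), round(height_variance, 2))
print(round(p_taller_than_70, 3), round(low, 2), round(high, 2))
```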

2.4 Data Imputation

Another common application of probability distributions is to impute (i.e. fill in) missing values in your data. Suppose, for example, you have a vector of heights, but with some missing values:

\begin{equation}

heights=\{65.78, 71.52, ?, 68.22, ?, 68.70, ?, 70.01\}

\end{equation}

A common technique to fill in these missing values is to draw the missing points multiple times using one probability distribution with different parameter settings, analyze the multiple versions of your data, and finally integrate all versions into one final version (maybe with minimum variance or narrowest confidence interval). This technique is called multiple imputation, and it is widely used to fill in missing data values.
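The idea can be sketched in a few lines. This is a deliberately simplified illustration, not a full multiple-imputation procedure: it assumes a normal model fitted to the observed heights, keeps its parameters fixed across versions, and pools the versions by averaging their means. The helper `impute_once` and the choice of five versions are hypothetical.

```python
import random
from statistics import mean, stdev

heights = [65.78, 71.52, None, 68.22, None, 68.70, None, 70.01]
observed = [h for h in heights if h is not None]

rng = random.Random(0)   # fixed seed so the sketch is repeatable
n_versions = 5           # number of imputed copies of the data (assumed)


def impute_once(values, mu, sigma, rng):
    """Return a copy of `values` with each None replaced by a normal draw."""
    return [rng.gauss(mu, sigma) if v is None else v for v in values]


mu, sigma = mean(observed), stdev(observed)
versions = [impute_once(heights, mu, sigma, rng) for _ in range(n_versions)]

# Analyze each completed version, then pool the estimates by averaging them.
version_means = [mean(v) for v in versions]
pooled_mean = mean(version_means)
print(round(pooled_mean, 2))
```

Drawing the missing values several times, rather than once, keeps the uncertainty about them visible in the spread of `version_means`.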

2.5 Making Predictions

The fifth use of probability distributions is to make predictions about events and hypotheses, using our beliefs and/or some historical data. Common approaches to calculating predictions (or guesses) using probability distributions are Bayesian and Frequentist inference.

3. Components

There are multiple components of probability distributions that outline their functionality and applications. Anyone who wants to use a distribution should learn about its specific components. In the following points, I will briefly cover some of the basic components of probability distributions.

3.1 Random Variables

A random variable is a numerical mapping of all possible outcomes of a random process, and a random process is a process whose outcome we do not know until it occurs. For example, the random variable \(X\) of tossing a fair coin can be expressed as \(X=\{0,1\}\), where the outcome \(Head\) is mapped to 0 and the outcome \(Tail\) is mapped to 1.

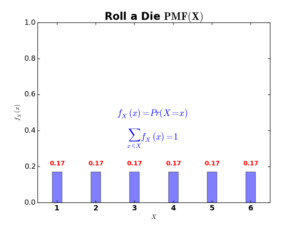

Another example is rolling a die, where the random variable \(X\) can be represented by \(X=\{1,2,3,4,5,6\}\), with each side of the die encoded as its equivalent numerical value in vector \(X\).

As we saw before (in the logistic regression post), there are two basic types of data variables: categorical (discrete) and quantitative (continuous). There are also two corresponding types of random variables: discrete and continuous random variables. Each type of random variable is represented by a specific class of probability functions.
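To make the mapping concrete, here is a minimal sketch that simulates both random processes and converts the coin outcomes to numbers. The dictionary `coin_rv` follows the Head to 0, Tail to 1 encoding used above; the seed and the number of trials are arbitrary choices for illustration.

```python
import random

# Numerical mapping of the coin's outcomes, as in the text.
coin_rv = {"Head": 0, "Tail": 1}

rng = random.Random(42)  # fixed seed so the run is repeatable

# Random process: ten coin tosses, then the random variable's values.
coin_outcomes = [rng.choice(["Head", "Tail"]) for _ in range(10)]
coin_values = [coin_rv[outcome] for outcome in coin_outcomes]

# For a die, each face is already its own numerical value.
die_values = [rng.randint(1, 6) for _ in range(10)]

print(coin_outcomes)
print(coin_values)
print(die_values)
```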

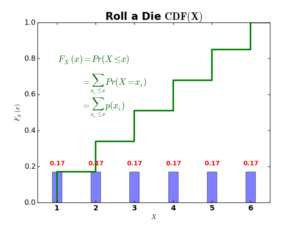

3.2 Probability Mass Function (PMF)

Probability mass functions (PMFs) describe the probabilities of the values of a discrete random variable \(X\). The values of PMF(\(X\)) must sum to 1 over all possible values of \(X\).

For example, if we compute the PMF of rolling a die, where random variable \(X=\{1,2,3,4,5,6\}\), we can draw PMF(\(X\)) as follows:

\begin{equation}

f_X(x)=Pr(X= x)\ \ \ and\ \ \ \sum_{x\in X} f_X(x)=1\tag{1}

\end{equation}

A probability mass function (PMF) gives the probability that a random variable \(X\) takes a particular outcome \(x\in X\). In the die example, \(f_X(x=3)=\frac{1}{6}\approx 0.17,\ f_X(x=1)=\frac{1}{6}\approx 0.17,\ f_X(x=7)=0\). Note that the probabilities of all possible values of \(x\) sum to 1.
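Equation (1) for the fair die can be written as a short function. This is a sketch using exact fractions, so each face has probability exactly 1/6 (about 0.17 when rounded); the function name `die_pmf` is only illustrative.

```python
from fractions import Fraction


def die_pmf(x):
    """PMF of a fair six-sided die: 1/6 on faces 1..6 and 0 everywhere else."""
    return Fraction(1, 6) if x in range(1, 7) else Fraction(0)


print(die_pmf(3), round(float(die_pmf(3)), 2))
print(die_pmf(7))
print(sum(die_pmf(x) for x in range(1, 7)))
```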

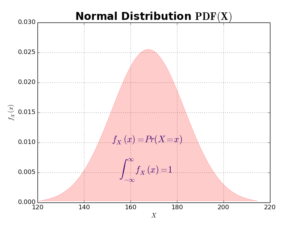

3.3 Probability Density Function (PDF)

Just as the PMF describes the probabilities of discrete random variables, the probability density function (PDF) describes how probability is spread over the values of a continuous random variable. An example of a PDF is that of the normal distribution below.

Please note that for PDFs, the area under the curve must equal 1.

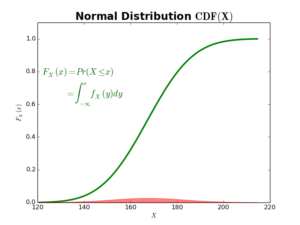
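The area condition can be checked numerically. The sketch below assumes a standard normal distribution from Python's `statistics` module and approximates the integral with a simple midpoint rule over the range -8 to 8, which covers essentially all of the probability; the helper `midpoint_area` is hypothetical.

```python
from statistics import NormalDist

std_normal = NormalDist(0, 1)


def midpoint_area(pdf, a, b, steps=10_000):
    """Approximate the integral of `pdf` over [a, b] with the midpoint rule."""
    width = (b - a) / steps
    return sum(pdf(a + (i + 0.5) * width) for i in range(steps)) * width


area = midpoint_area(std_normal.pdf, -8, 8)
print(round(area, 6))

# A density is not itself a probability: for a narrow curve it can exceed 1.
narrow_peak = NormalDist(0, 0.1).pdf(0)
print(round(narrow_peak, 2))
```

The second print is a reminder that only areas under the PDF are probabilities, not the curve's height at a point.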

3.4 Cumulative Distribution Function (CDF)

Besides the PMF and PDF, there exists a third type of function that applies to both discrete and continuous variables: the cumulative distribution function (CDF). The CDF computes the cumulative probability up to a given value \(x\in X\). In the case of a discrete random variable, the CDF looks like this:

The green stepwise function above represents the CDF of rolling a die, and it is computed as follows:

\begin{equation}

\begin{split}

F_X(x) &=Pr(X\leq x) \\

&=\sum_{x_i\leq x} Pr(X=x_i) \\

&=\sum_{x_i\leq x} p(x_i)

\end{split}\tag{2}

\end{equation}

You can think of \(F_X(x)\) as summing up all probabilities up to the value \(x\). For example:

\begin{equation}

F_X(x=3)= \sum_{x_i=1}^3 p(x_i)= \frac{1}{6}+\frac{1}{6}+\frac{1}{6}=0.5

\end{equation}
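Equation (2) for the fair die can be sketched as a running sum of the PMF. Exact fractions are used so that the three terms add to exactly 1/2 rather than to a value built from rounded terms; the name `die_cdf` is illustrative.

```python
from fractions import Fraction


def die_cdf(x):
    """CDF of a fair die: sum of p(x_i) = 1/6 over all faces x_i <= x."""
    return sum(Fraction(1, 6) for face in range(1, 7) if face <= x)


print(die_cdf(3))    # probability of rolling 1, 2, or 3
print(die_cdf(3.5))  # the CDF is flat between faces, so same value as at 3
print(die_cdf(6))    # all faces included
```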

For continuous random variables, CDFs use integrals instead of summations, in order to compute the area under continuous PDF curves. The next figure shows an example of the normal distribution CDF.

\begin{equation}

\begin{split}

F_X(x) &=Pr(X\leq x) \\

&=\int_{-\infty}^x f_X(y)dy

\end{split}\tag{3}

\end{equation}

where \(y\) is a dummy integration variable.

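Please note that for continuous random variables, we integrate PDF(\(x\)) from \(-\infty\) up to \(x\) to obtain CDF(\(x\)), and we differentiate CDF(\(x\)) with respect to \(x\) to obtain PDF(\(x\)). Integrating the PDF over given limits (say \(\{a,b\}\)) gives the probability \(Pr(a\leq X\leq b)=F_X(b)-F_X(a)\).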
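Both directions of this relationship can be checked numerically. The sketch below assumes a standard normal distribution: integrating the PDF between two limits \(a\) and \(b\) corresponds to the difference \(F_X(b)-F_X(a)\), and a finite-difference slope of the CDF approximates the PDF. The step size `h` and the test point `x` are arbitrary choices.

```python
from statistics import NormalDist

dist = NormalDist(0, 1)

# Probability of landing between a and b, obtained from the CDF.
a, b = -1.0, 1.0
p_interval = dist.cdf(b) - dist.cdf(a)
print(round(p_interval, 4))

# Differentiating the CDF numerically (central difference) recovers the PDF.
x, h = 0.5, 1e-5
numeric_pdf = (dist.cdf(x + h) - dist.cdf(x - h)) / (2 * h)
print(round(numeric_pdf, 6), round(dist.pdf(x), 6))
```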

4. Moments

Each probability distribution can be summarized by a set of coefficients (called moments). These moments represent important statistics that can be computed from mass/density functions. The most important moments and related summary statistics of probability distributions are listed below:

4.1 Expectation

The expectation \(E(X)\) of a probability distribution is the average (i.e. mean) value of its random variable \(X\). It is also called the first moment. The term “expectation” is used here for the value that represents the whole distribution in probabilistic inference. It is sometimes also called the “point estimate” or “best guess”. It is denoted \(E(X)\) or \(\mu(X)\).

4.2 Variance

The variance \(V(X)\) of a probability distribution expresses how far the values \(x\in X\) are spread out from the expectation \(\mu(X)\). It is also called the second central moment. It is denoted \(V(X)\) or \(\sigma^2(X)\), where \(\sigma(X)\) is the standard deviation.

4.3 Precision

Precision \(\tau(X)\) is the reciprocal of the variance, \(\tau(X)=\frac{1}{V(X)}\). It is used to measure how close a set of random numbers \(x\in X\) are to their average value \(\mu(X)\). High precision values are usually used as an indicator of good probabilistic models.
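As a worked example of these three quantities, the sketch below computes the expectation, variance, and precision of a fair die directly from its PMF, using exact fractions. The fair die is an assumption chosen to match the earlier examples.

```python
from fractions import Fraction

faces = range(1, 7)
p = Fraction(1, 6)  # probability of each face of a fair die

# Expectation: probability-weighted average of the outcomes.
expectation = sum(x * p for x in faces)                     # 7/2

# Variance: probability-weighted average squared distance from the expectation.
variance = sum((x - expectation) ** 2 * p for x in faces)   # 35/12

# Precision: reciprocal of the variance.
precision = 1 / variance                                    # 12/35

print(expectation, variance, precision)
```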

4.4 Median

The median value of a set of random numbers \(x\in X\) is the value that separates the higher half from the lower half of vector \(X\). For example, in dataset \(\{0,1,2,3,4,5,6\}\) the median value is 3.

4.5 Mode

The mode value of a set of random numbers \(x\in X\) is the most common (i.e. most repeated) value in the data set. It is also the point where the PMF or PDF reaches its global maximum. For example, in dataset \(\{0,1,5,5,7,2,9,8,5\}\) the mode value is 5.
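The two small datasets used for the median and the mode can be checked with Python's standard `statistics` module, shown here as a minimal sketch:

```python
from statistics import median, mode

median_data = [0, 1, 2, 3, 4, 5, 6]
mode_data = [0, 1, 5, 5, 7, 2, 9, 8, 5]

middle_value = median(median_data)   # value separating the lower and upper halves
most_common = mode(mode_data)        # most frequently repeated value

print(middle_value, most_common)
```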